One Algorithm to Rule Them All

Don't Fight The Data

Pedro Domingos makes the case that ‘All knowledge – past, present, and future – can be derived from data by a single, universal learning algorithm’. He calls this learner the Master Algorithm.

Pedro highlights that even more astonishing than the breadth of machine learning's applications is that the same algorithm is doing all of these different things. Not only can the same learning algorithm do an endless variety of things, but it is also shockingly simple compared to the algorithms it replaces. Most learners can be coded up in a few hundred lines, or perhaps a few thousand if we add a lot of bells and whistles. In contrast, the programs they replace can run to hundreds of thousands or even millions of lines, and a single learner can induce an unlimited number of programs.

However, there is a catch. A learner needs enough of the appropriate data to learn, and “enough data” could be infinite. Learning from finite data requires making assumptions, and different learners make different assumptions, which makes them good for some things but not for others.

Evidence that one algorithm could learn so many different things comes from several fields:

1. Neuroscience: All information in the brain is represented in the same way – via the electrical firing patterns of neurons. Thus, one route – arguably the most popular one – to inventing the Master Algorithm is to reverse engineer the brain.

2. Evolution: Life’s infinite variety is the result of a single mechanism: natural selection. It works through iterative search, where we solve a problem by trying many candidate solutions, selecting and modifying the best ones, and repeating these steps as many times as necessary. Evolution is an algorithm, and it is the ultimate example of how much a simple learning algorithm can achieve given enough data.

3. Physics: The same equations applied to different quantities often describe phenomena in different fields, like quantum mechanics, electromagnetism, and fluid dynamics. The wave equation, the diffusion equation, Poisson’s equation: once we discover one of them in one field, we can more readily discover it in others; and once we have learned how to solve it in one field, we know how to solve it in all. Moreover, all of these equations are quite simple, and quite conceivably they are all instances of a master equation, so that all the Master Algorithm needs to do is figure out how to instantiate it for different data sets.

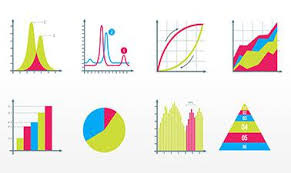

4. Statistics: According to one school of statisticians, a single simple formula underlies all learning. Bayes’ theorem, as the formula is known, tells us how to update our beliefs whenever we see new evidence. A Bayesian learner starts with a set of hypotheses about the world. When it sees a new piece of data, the hypotheses that are compatible with it become more likely, and the hypotheses that aren’t become less likely or even impossible. After seeing enough data, a single hypothesis dominates, or a few do. Bayes’ theorem is a machine that turns data into knowledge.

5. Computer Science: One definition of AI is that it consists of finding heuristic solutions to NP-complete problems. Often, we do this by reducing them to satisfiability, the canonical NP-complete problem: Can a given logical formula ever be true, or is it self-contradictory? If we invent a learner that can learn to solve satisfiability, it has a good claim to be the Master Algorithm.
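
To make the Bayesian learner from the Statistics item concrete, here is a minimal Python sketch of the update rule P(h | data) = P(h) · P(data | h) / P(data). It is only an illustration, not code from the book: the three coin hypotheses, their names, and the helper functions `bayes_update`, `likelihood_of`, and `learn` are all assumptions made up for this example.

```python
from fractions import Fraction

# Hypothetical hypotheses about a coin: the probability that it lands heads.
HYPOTHESES = {
    "always_tails": Fraction(0),
    "fair": Fraction(1, 2),
    "heads_biased": Fraction(9, 10),
}


def bayes_update(prior, likelihoods):
    """Return the posterior P(h | data) from a prior P(h) and likelihoods P(data | h)."""
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(data), summed over all hypotheses
    if evidence == 0:
        raise ValueError("the data is impossible under every hypothesis")
    return {h: p / evidence for h, p in unnormalized.items()}


def likelihood_of(flip, p_heads):
    """Probability of a single flip ('H' or 'T') under a given heads probability."""
    return p_heads if flip == "H" else 1 - p_heads


def learn(flips):
    """Start from a uniform prior and update it once per observed flip."""
    posterior = {h: Fraction(1, len(HYPOTHESES)) for h in HYPOTHESES}
    for flip in flips:
        likelihoods = {h: likelihood_of(flip, p) for h, p in HYPOTHESES.items()}
        posterior = bayes_update(posterior, likelihoods)
    return posterior


if __name__ == "__main__":
    for hypothesis, probability in learn("HHHHHHHHHH").items():
        print(f"{hypothesis}: {float(probability):.4f}")
```

After a single head, the "always_tails" hypothesis becomes impossible, and after ten heads in a row the "heads_biased" hypothesis dominates, which mirrors the description above. Exact fractions are used instead of floats so that the probabilities always sum to exactly one.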

Objections to Machine Learning

The most determined resistance to machine learning comes from its perennial foe: knowledge engineering. According to its proponents, knowledge can’t be learned automatically; it must be programmed into the computer by human experts. Sure, learners can extract some things from data, but nothing we’d confuse with real knowledge. To knowledge engineers, data is not the new oil, it’s the new snake oil! Unfortunately, the two camps often talk past each other. They speak different languages: machine learning speaks probability, and knowledge engineering speaks logic.

A second objection is that no matter how smart the algorithm, there are some things it just can’t learn. It’s true that some things are predictable and some aren’t (e.g. Black Swan events), and the first duty of the machine learner is to distinguish between them. But the goal of the Master Algorithm is to learn everything that can be known. Learning algorithms are quite capable of accurately predicting rare, never-before-seen events; we could even say that’s what machine learning is all about.

A related, frequently heard objection is “Data can’t replace human intuition.” In fact, it’s the other way around: human intuition can’t replace data. Intuition is what we use when we don’t know the facts, and since we often don’t, intuition is precious. But when the evidence is before us, why should we deny it? Because of the influx of data, the boundary between evidence and intuition is shifting rapidly, and as with any revolution, entrenched ways have to be overcome. If we want to be tomorrow’s authority, we should ride the data, not fight it…